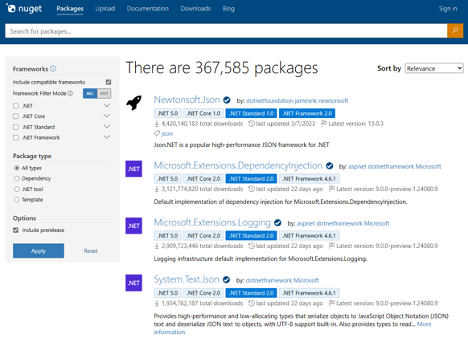

Refining Your Search: Introducing NuGet.org’s Compatible Framework Filters

Announcing NuGet 6.9

Introducing NuGetSolver: A Powerful Tool for Resolving NuGet Dependency Conflicts in Visual Studio

Announcing NuGet.exe and NuGet Client SDK Packages Support Policy: Keeping You Informed and Secure

Announcing NuGet 6.8 – Maintaining Security with Ease

HTTPS Everywhere Update

Announcing NuGet 6.7 – Keeping You Secure

The Microsoft author-signing certificate will be updated as soon as August 14th, 2023
